MedGPT – Could we revolutionise healthcare?

My thoughts on how we can use AI to supercharge the healthcare industry.

👋 Hey, I’m Linus and welcome to a 🔒 subscriber-only edition 🔒 of my weekly newsletter. Each week I write about all things AI. I cover your questions, write op-eds and create AI tutorials.

Welcome to all new readers ✨

A big thank you to all the new subscribers. I can’t believe that there are ~13,000 of you now. I'm extremely humbled by that. I appreciate you. Thanks for reading.

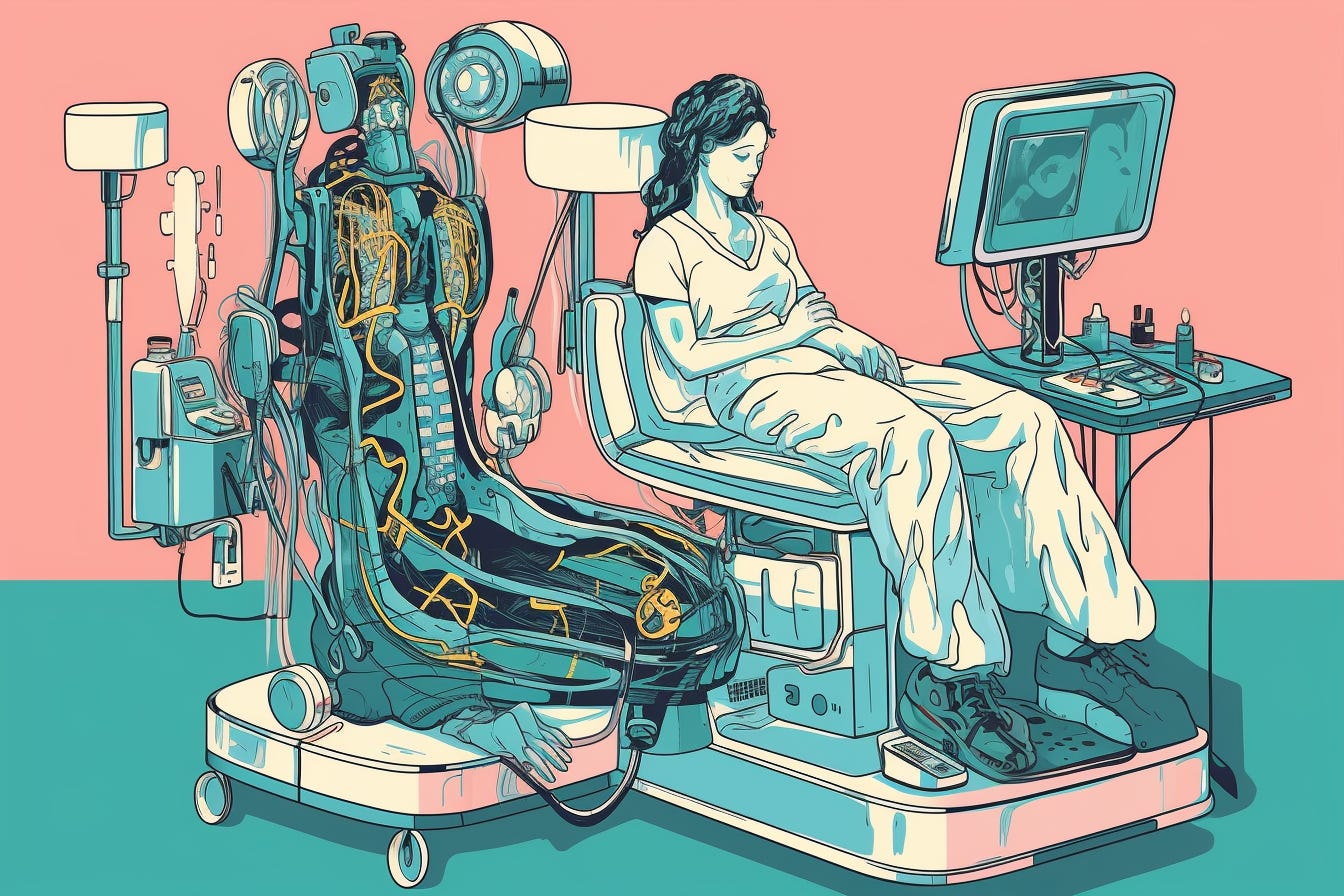

MedGPT - A proposal on how we can supercharge the healthcare industry with an AI diagnosis agent.

It's a well-known problem that doctors and nurses all around the world are in short supply. Education is long, and pay and hours are bad. Yet somehow hospitals are serving patients and the healthcare heroes are saving lives every day. We can do better.

I recently came across this Medium article, in which Dr Josh Tamayo-Sarver, MD, PhD struggles to get ChatGPT to help him in the ER. What's more, he makes some rather uneducated statements that I feel are dangerous. This got me thinking.

If we can train an LLM to be hyper-specific, doing nothing but initial patient diagnosis based on as much information as possible, we could have one of the first truly smart AI systems to relieve pressure on the healthcare industry's bottlenecks.

Let’s try to put this in layman's terms, as simply as possible. Let’s call the LLM that we want to train MedGPT. Simple.

Let’s imagine MedGPT trained to be as smart as GPT-4, but fine-tuned and purposefully aligned to do medical diagnosis. We can do this by importing and synthetically creating hundreds of millions of medical cases and having the model train on them. As a second layer, add RLHF (Reinforcement Learning from Human Feedback). The aligners should be doctors with a lot of expertise. This should give us a super-aligned model for medical diagnosis purposes.

We can assume the model to be truly multimodal (like GPT-4), with the ability to interact using natural language as well as consume images or perhaps even videos as input, and then properly analyse what it sees.

So let’s imagine that we now have a custom-trained MedGPT with the same base capacity as GPT-4, but with the added context of being an absolute specialist in performing medical diagnosis.

I can easily imagine a system, maybe based on the AutoGPT paradigm, where MedGPT gets a single objective: go figure out what's wrong with a patient.

It’s given the patient's entire medical history by default as long-term context. On top of that, it gets the latest real-time information gathered at the point of interaction, either at home in a proactive manner or at the medical facility in a reactive/proactive scenario. This can include self-assessment, Apple Health data for a specific time period, or your entire recorded Apple Health data. It can include images, video and audio too.
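To make the two kinds of context a bit more concrete, here is a minimal Python sketch of how they might be held together. Everything in it is an assumption made up for illustration: the `PatientContext` and `Observation` names, the fields, and the sample values are hypothetical and not taken from any real health data API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Observation:
    """One real-time data point, e.g. a wearable reading (hypothetical shape)."""
    source: str            # illustrative label such as "wearable" or "self_assessment"
    kind: str              # illustrative label such as "heart_rate"
    value: float
    recorded_at: datetime


@dataclass
class PatientContext:
    """Long-term history plus whatever was gathered at the point of interaction."""
    history: list[str] = field(default_factory=list)
    observations: list[Observation] = field(default_factory=list)

    def recent(self, now: datetime, window: timedelta) -> list[Observation]:
        """Return only the observations recorded within `window` before `now`."""
        cutoff = now - window
        return [o for o in self.observations if cutoff <= o.recorded_at <= now]


# Made-up sample data, purely for illustration.
now = datetime(2023, 3, 15, 12, 0)
ctx = PatientContext(
    history=["2019: appendectomy"],
    observations=[
        Observation("wearable", "heart_rate", 72.0, now - timedelta(days=30)),
        Observation("wearable", "heart_rate", 118.0, now - timedelta(hours=2)),
    ],
)

# "A specific time period": only the last seven days of readings.
last_week = ctx.recent(now, timedelta(days=7))
print([o.value for o in last_week])  # [118.0]
```

Passing a very large window would correspond to handing over the entire recorded data set instead of a specific time period.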

We can imagine the model being either multimodal (as mentioned above) or having a HuggingGPT-like router that can patch in any specific model to complete a goal/task. If it needs to analyse a chest x-ray, it uses a specific model for that. If it needs to listen to an audio recording of a heart, it uses another model. If it needs to assess an ultrasound, it connects a different model. You get the point. All of this would be seamless and behind the scenes for the practitioner or patient.

We can look at the initial MedGPT model as the first line of defence, with the subsequent models or layers acting as deeper knowledge specialists. The further out in the root system you go, the more specific the models and their analysis can be. At the centre we can see some sort of dispatcher that communicates back and forth between the different models, much like HuggingGPT.
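As a rough illustration of the dispatcher idea, here is a small Python sketch. It is not how HuggingGPT is implemented. The specialist functions are hypothetical stand-ins that just return strings, where a real system would call trained models, and the modality labels are names I made up.

```python
from typing import Callable

# A "specialist" here is a plain function standing in for a trained model (assumption).
Specialist = Callable[[str], str]


def chest_xray_model(payload: str) -> str:
    return f"chest x-ray findings for {payload}"


def heart_audio_model(payload: str) -> str:
    return f"heart sound findings for {payload}"


def ultrasound_model(payload: str) -> str:
    return f"ultrasound findings for {payload}"


# Hypothetical registry mapping an input modality to its specialist.
SPECIALISTS: dict[str, Specialist] = {
    "chest_xray": chest_xray_model,
    "heart_audio": heart_audio_model,
    "ultrasound": ultrasound_model,
}


def dispatch(inputs: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Send each (modality, payload) pair to its specialist.

    Inputs with no matching specialist are listed under "unrouted"
    so a human can review them instead of the system guessing.
    """
    report: dict[str, list[str]] = {"findings": [], "unrouted": []}
    for modality, payload in inputs:
        specialist = SPECIALISTS.get(modality)
        if specialist is None:
            report["unrouted"].append(modality)
        else:
            report["findings"].append(specialist(payload))
    return report


report = dispatch([("chest_xray", "scan_001"), ("ecg", "trace_007")])
print(report)
# {'findings': ['chest x-ray findings for scan_001'], 'unrouted': ['ecg']}
```

The one design choice worth noting is the "unrouted" bucket: when no specialist exists for an input, the sketch surfaces that fact rather than forcing the input through the wrong model.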

From the perspective of the medical professional the interface might be even simpler than ChatGPT. It might just be ONE additional button in an existing digital dashboard. The button initiates a complete cascading flow of events, similar to how AutoGPT takes just a few parameters and then starts executing to finish a desired goal/task.

From a patient's perspective there might just be a simple self-check conversation similar to ChatGPT. Once the model feels confident that it has enough information to proceed, it gives the patient a way to continue, such as a button or a prompt asking for approval to start.
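The "confident enough to proceed" step could look something like the Python sketch below. It is only a toy under stated assumptions: `toy_confidence` is a placeholder that simply counts answers, the 0.75 threshold is arbitrary, and a real model would have to supply its own, properly validated, confidence estimate.

```python
from typing import Callable


def intake(
    answers: list[str],
    estimate_confidence: Callable[[list[str]], float],
    threshold: float = 0.75,  # arbitrary value chosen for this illustration
) -> dict:
    """Collect answers one at a time until the confidence estimate reaches the threshold."""
    collected: list[str] = []
    for answer in answers:
        collected.append(answer)
        if estimate_confidence(collected) >= threshold:
            # Enough information: this is where the patient would be asked for approval.
            return {"ready": True, "collected": collected}
    # Ran out of answers before reaching the threshold.
    return {"ready": False, "collected": collected}


def toy_confidence(collected: list[str]) -> float:
    """Placeholder: confidence grows by 0.25 per answer, capped at 1.0."""
    return min(1.0, 0.25 * len(collected))


result = intake(
    ["chest pain", "started 2 hours ago", "radiates to left arm", "no allergies"],
    toy_confidence,
)
print(result["ready"], len(result["collected"]))  # True 3
```

In this sketch the conversation stops after the third answer, and the fourth question is never asked.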

The output of the combined efforts of the different models could then range from something as simple as “Everything is fine. You are healthy and do not need to seek medical attention” to something like “This patient is having a stroke and needs emergency treatment”. This matters because, for example, every year tens of thousands of patients are misdiagnosed for stroke and sepsis.

I want to be clear: my level of understanding in the field of AI is limited. I’m not a PhD, I’m not an MD, I’m not a researcher. I’m just a person who likes connecting the dots and looking at the big picture.

Without AI, this proposed system would never work, or it would take too long to even start building something. But due to the innate ability of an LLM like GPT-4 to take unstructured data and make sense of it, I can see how it could easily traverse different systems and data structures, and communicate back and forth between existing systems without much friction, if any at all.

I’m currently not aware of anyone building something like this, and I’m not myself doing so. But I do understand that the implications of a system like this could be profound.

I would love for someone who knows more than me to tell me whether this can or can’t be done. In my mind, it all seems very doable, and the incentives are clearly there!

This was a note from Inside my Head

/Linus Ekenstam, March 15th 2023

* Disclaimer: MedGPT does not actually exist. It’s a made-up idea, and it's up for grabs if you want it.

New noteworthy AI tools

👉 Concise.app - Daily Newsfeed Summary, Concise is the new way to read news. Get the latest news summary from tech, startup, science to economics - It’s Free

And a few more bonus tools.

🪄 Pico AI Copilot - Unleash Your Inner Maverick with Pico

🧠 Consensus.app - Evidence-Based Answers, Faster. Ask anything and get your answer with summaries of the top 10 research papers cited.

💬 Chatbase.co - Chat with any document or website.

I’m doing a Webinar/workshop for companies

On April 24th, at 7pm GMT+1 I’m hosting a webinar/workshop for companies that are interested in learning more about how to leverage Midjourney in their business. Only a few seats left. First come, first served.

Still here?

If you are still here, thank you. I hope you enjoyed this note on how I think AI can revolutionise the healthcare industry. If you have questions, feel free to reply to this email, reach out to me on Twitter, or reply/comment here on Substack.

Newsletter Sponsor opportunity

If you want to sponsor future newsletters, you can find more information about that here. 🙏🏻

I work in Software as a Medical Device (SaMD). Software/AI has already been heavily regulated for 3 years. In the US 182 AI models are approved, and in the EU 52 so far, some of which I certified for customers I consulted for. In VERY short words: you need to have a lot of documentation ready and a valid QMS for development, verification and validation. You need unbiased, evaluated (diverse) clinical training data, and then, for the validation of your trained LLM, a SECOND independent data set with independent assessment (usually by doctors). You then need to run a study and test it in the wild with predefined expected outcomes and trust levels. Many companies are currently working on this or are in certification. On my current assignment we got Class IIb under EU MDR (as the first company), which allows using algorithms for any kind of disease and patient condition, to treat or diagnose patients with up to serious conditions.

I am happy to guide everybody through it. For a start, please Google „MDCG 2019-11“.

Rudolf (Quality and Regulatory affairs expert ;-) )

Isn't that just like Med-PaLM 2, which Google is working on? In my opinion a medical LLM is the most complicated kind, as a single hallucination or wrong answer could potentially kill a person.