Pause All Giant AI Experiments

Three days ago, more than 1,000 individuals, including corporate leaders, AI researchers and notable members of the AI industry, signed a petition to pause the training of any AI more powerful than GPT-4.

Some of the noteworthy individuals who have signed the petition are Yoshua Bengio, Elon Musk, Steve Wozniak, Yuval Noah Harari, Andrew Yang, Emad Mostaque, Max Tegmark, Gary Marcus, Julien Billot and Nick Bostrom.

I want to look beyond the petition and delve a bit into the history of AI. I'll share conversations and interviews that are available for you to explore if you are interested in this topic. Let’s dive in.

TL;DR

Many people want AI development to slow down and allow time for us, mankind, to adapt. This would enable us to consider the potential impacts and risks that come with these new and powerful (AI) tools.

Part 1 - The past

If we want to understand what's really going on, we need to immerse ourselves fully in the problem. The story starts earlier, but the book Superintelligence by Nick Bostrom (link is to Amazon so you can buy the book) marks a good point in time to start. There are also 30+ years of science fiction and AI philosophy to sift through, but I'll leave that for another day. Below is also Nick's now-famous TED talk on what happens when our computers get smarter than us.

The next thing worth watching to better understand what's going on is this 3-hour (!) Lex Fridman interview with Max Tegmark. If you want to listen to more Max Tegmark, you can also tune in to the very first episode of the Lex Fridman Podcast.

It's hard not to include a talk with Ray Kurzweil. This is not meant to be a Lex newsletter, but there are not many good interviews of this length and depth with Ray.

Part 2 - Today

So if you have grounded yourself in the material above, we can move on to more recent interviews. The first one is with Ilya Sutskever, the Chief Scientist and Co-Founder at OpenAI.

The second interview is again with Lex Fridman, but this time with Sam Altman, CEO and Co-Founder of OpenAI. There is also a very good read from Sam Altman that he wrote 8 years ago. It comes in two parts: part 1 and part 2.

I'm fully aware that the above content is 6.5 hours long and that many of you might just want the short version. However, if you really want to understand the underlying worries, where we are right now with the capabilities of GPT-4, and what the founders of OpenAI themselves say about risk, then I can't stress enough how important it is to listen to or watch these interviews.

Yesterday Gary Marcus posted this and later this on his own Substack 👇

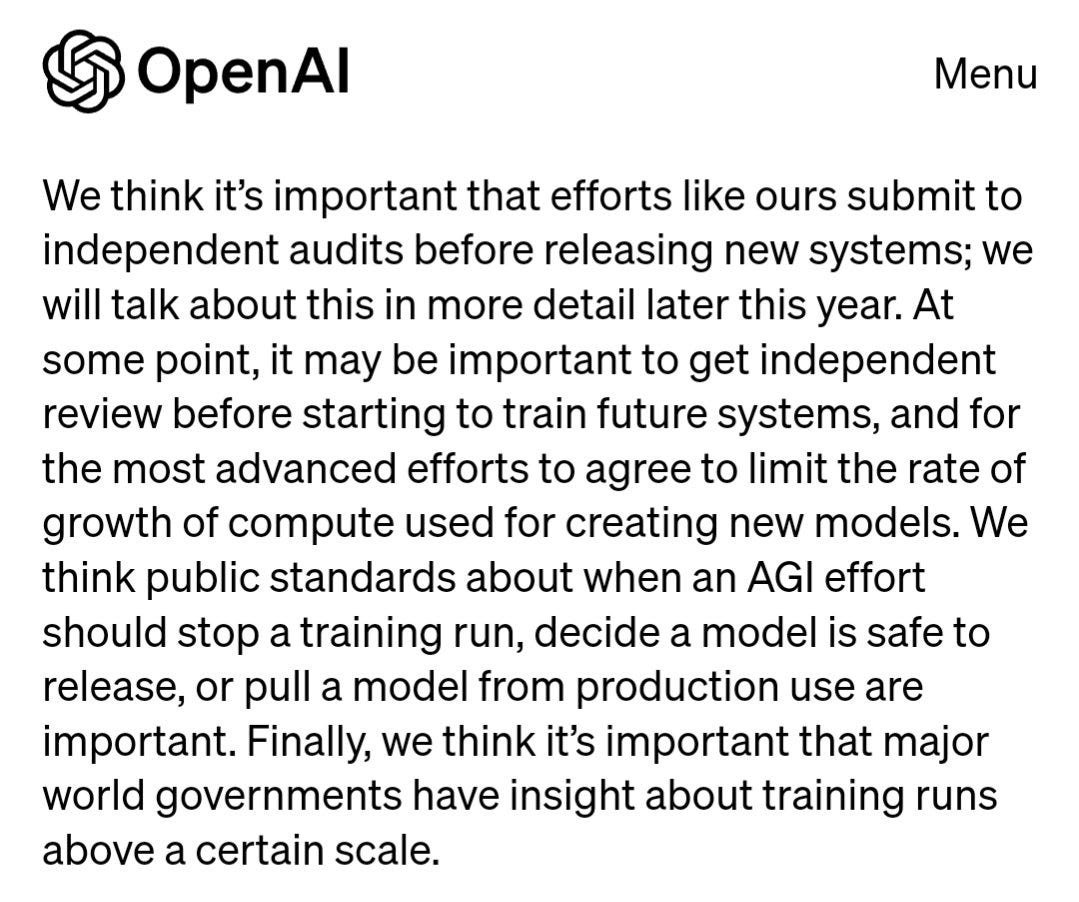

What does OpenAI say about all of this?

It’s not as if OpenAI is silent about its own concerns. They have an entire post that can be read here, and reading it is almost required to understand OpenAI’s internal standpoint on this. I’ll include a small excerpt below. But to truly understand the entire scope, you need to read more.

Other AI companies on Safety Concerns – Anthropic

While we are on the topic of AI safety, I think it’s also important to read this long post from Anthropic that touches on a lot of really important matters. Here are their current safety research areas.

Mechanistic Interpretability

Scalable Oversight

Process-Oriented Learning

Understanding Generalization

Testing for Dangerous Failure Modes

Societal Impacts and Evaluations

Conclusions

I personally want to stay optimistic about all these new AI tools and advancements. But I have huge respect for the concerns, and I think we should tread carefully into this new era. We should have these hard conversations and talk through the pros and cons. Maybe that even means pausing training on the next LLM until we have figured out some of the boundaries or regulation that we might need to have in place before we continue.

Still here?

If you are still here, thank you. I hope you enjoyed this deep dive on AI. If you have questions, feel free to comment on Substack, reply to this email or reach out to me on Twitter. I would love to chat.

Fabulous resource buddy. Thanks.

Thanks for the article. The most terrible part of this idea is potentially delaying the solutions to huge problems that AI could actually help with. https://metavert.substack.com/p/existential-threats-and-artificial